CS 7641 Midterm Project Report (Team 04)

Anirudh Prabakaran, Himanshu Mangla, Shaili Dalmia, Vaibhav Bhosale, Vijay Saravana Jaishanker

Proposal Link

Introduction/Background:

“You are what you stream.” Music is often thought to be one of the best and most fun ways to get to know a person. Spotify, valued at over $26 billion, leads the music streaming industry. Machine learning is at the core of its research, and it has invested significant engineering effort to answer the ultimate question: “What kind of music are you into?”

There is also an interesting correlation between music and mood: certain types of music are associated with particular moods. ML researchers could potentially exploit this association to better understand users.

The use of machine learning to extract such information from music has been well explored in academia. Much of the highly cited prior work in this area [1, 2] dates back roughly two decades, to a time when much of the effort went into pre-processing raw audio tracks to extract features such as timbre, rhythm, and pitch.
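To make this kind of hand-crafted feature extraction concrete, here is a minimal NumPy sketch of two classic timbral features of the sort used in [1]: the spectral centroid (a "brightness" cue) and the zero-crossing rate (a noisiness cue). The frame length, sample rate, and function names are illustrative assumptions, not the feature set used in our project.

```python
import numpy as np

def zero_crossing_rate(frame: np.ndarray) -> float:
    """Fraction of adjacent sample pairs whose sign differs."""
    signs = np.signbit(frame)
    return float(np.mean(signs[1:] != signs[:-1]))

def spectral_centroid(frame: np.ndarray, sr: int) -> float:
    """Magnitude-weighted mean frequency (Hz) of one Hann-windowed frame."""
    window = np.hanning(len(frame))
    mags = np.abs(np.fft.rfft(frame * window))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sr)
    total = mags.sum()
    # A silent frame has no spectral energy, so report 0 instead of dividing by zero
    return float((freqs * mags).sum() / total) if total > 0 else 0.0

# Usage on one assumed 2048-sample frame of a pure 440 Hz tone at 22.05 kHz
sr = 22050
t = np.arange(2048) / sr
tone = np.sin(2 * np.pi * 440.0 * t)
print(spectral_centroid(tone, sr))   # roughly 440
print(zero_crossing_rate(tone))      # roughly 0.04 (two crossings per ~50-sample period)
```

A pure tone is a convenient sanity check: its centroid should sit near its own frequency, while real music spreads energy across many bins.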

Advances in machine learning have created new opportunities in music analysis through visual representations of audio obtained via spectral transformations (spectrograms). These representations make it possible to apply techniques developed for image classification to this problem.
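As a rough illustration of how a spectral transformation turns audio into an image, the sketch below slices a signal into overlapping frames, applies a Hann window, and takes the magnitude of each frame's FFT. The result is a 2-D time-frequency array that image-style models can consume. The frame length (`n_fft`) and hop size are arbitrary assumptions; libraries such as librosa provide tuned implementations.

```python
import numpy as np

def log_spectrogram(signal: np.ndarray, n_fft: int = 1024, hop: int = 512) -> np.ndarray:
    """Return a (n_fft // 2 + 1, n_frames) log-magnitude spectrogram in dB."""
    if len(signal) < n_fft:
        raise ValueError("signal shorter than one frame")
    n_frames = 1 + (len(signal) - n_fft) // hop
    window = np.hanning(n_fft)
    # Overlapping windowed frames stacked as rows: shape (n_frames, n_fft)
    frames = np.stack([signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    mags = np.abs(np.fft.rfft(frames, axis=1))  # (n_frames, n_fft // 2 + 1)
    db = 20.0 * np.log10(mags + 1e-10)           # small offset avoids log(0)
    return db.T                                  # frequency on rows, time on columns

sr = 22050
t = np.arange(sr) / sr                              # one second of audio
chirp = np.sin(2 * np.pi * (200 + 800 * t) * t)     # tone whose pitch rises over time
spec = log_spectrogram(chirp)
print(spec.shape)  # (513, 42)
```

Plotted as an image, the chirp appears as a rising diagonal line, which is exactly the kind of visual pattern an image classifier can learn from.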

Problem Definition:

In this project, we compare a metadata-based approach to music analysis with a spectral-image content-based approach, using multiple machine learning techniques such as logistic regression and decision trees. We use the following tasks for our analysis:

- Genre Classification [1, 2]

- Mood Prediction [3]

Data Cleaning and Feature Engineering

We started with two datasets for our project: the 10,000-song subset of the Million Song Dataset [4] and the 1.2 GB GTZAN dataset [5], which contains 1,000 audio files spanning 10 genres.

From the Million Song Dataset subset, we extracted basic features that could help identify a song's genre, such as tempo, time signature, beats, key, loudness, and timbre. We used the tagtraum genre annotations for the Million Song Dataset as target genre labels for training, and removed songs without a label, which reduced our data to around 1.9k songs.
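Conceptually, this filtering is a join on track ID that keeps only annotated tracks. Below is a plain-Python sketch; the `track_id`/`genre` field names, the toy IDs, and the helper `attach_labels` are hypothetical placeholders, and the actual tagtraum annotation files may be laid out differently.

```python
from typing import Dict, List

def attach_labels(tracks: List[dict], genre_by_track: Dict[str, str]) -> List[dict]:
    """Return copies of the tracks that have a genre annotation, with a 'genre' field added.

    Unlabelled tracks are dropped because they cannot be used for supervised training.
    """
    labelled = []
    for track in tracks:
        genre = genre_by_track.get(track["track_id"])
        if genre is not None:
            labelled.append({**track, "genre": genre})  # copy; the input dict is untouched
    return labelled

# Hypothetical toy data standing in for the MSD subset and the genre annotations
tracks = [
    {"track_id": "TR0001", "tempo": 120.0},
    {"track_id": "TR0002", "tempo": 95.5},
    {"track_id": "TR0003", "tempo": 140.2},
]
genre_by_track = {"TR0001": "Rock", "TR0003": "Electronic"}

labelled = attach_labels(tracks, genre_by_track)
print([t["track_id"] for t in labelled])  # ['TR0001', 'TR0003']
```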

As feature engineering, we computed the means of several features such as pitch, timbre, and loudness, added these aggregate features to the original dataset, and dropped all rows containing NaN values to produce the final clean dataset.
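One way this aggregation could look is sketched below. In the Million Song Dataset, timbre and pitch are stored per segment (one 12-dimensional vector per segment), so averaging over segments yields a fixed-length per-track feature vector. The field names mirror MSD names such as `segments_timbre`, but the dict layout and the helper names are assumptions, and the toy vectors are 2-dimensional only to keep the example short.

```python
import math
from typing import Dict, List, Optional

def mean_vector(rows: List[List[float]]) -> List[float]:
    """Column-wise mean of equal-length vectors (e.g. one timbre vector per segment)."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def engineer(track: dict) -> Optional[Dict[str, float]]:
    """Flatten one track into scalar features; return None if anything is missing or NaN."""
    feats = {"tempo": track["tempo"], "loudness": track["loudness"]}
    for name in ("segments_timbre", "segments_pitches"):
        segs = track.get(name) or []
        if not segs:
            return None  # no segments, so no mean can be computed: treat as missing
        for i, value in enumerate(mean_vector(segs)):
            feats[f"{name}_mean_{i}"] = value
    if any(math.isnan(v) for v in feats.values()):
        return None  # equivalent to dropping a row that contains NaN
    return feats

tracks = [
    {"tempo": 120.0, "loudness": -7.5,
     "segments_timbre": [[1.0, 2.0], [3.0, 4.0]],
     "segments_pitches": [[0.2, 0.8], [0.4, 0.6]]},
    {"tempo": float("nan"), "loudness": -9.0,
     "segments_timbre": [[0.0, 0.0]], "segments_pitches": [[0.5, 0.5]]},
]
clean = [f for f in (engineer(t) for t in tracks) if f is not None]
print(len(clean), clean[0]["segments_timbre_mean_1"])  # 1 3.0
```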

For the spectral image content-based approach, we used readily available preprocessed data, converted from raw audio to mel-spectrograms using the librosa library. A total of 169 features are extracted for each track.
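For reference, the mel-spectrogram step can be approximated by projecting a power spectrogram onto triangular filters spaced evenly on the mel scale. The sketch below is not the preprocessing behind the data we used: it assumes the HTK mel formula m = 2595 log10(1 + f/700) (librosa defaults to the slightly different Slaney variant and also area-normalizes its filters), and the filter count of 40 is an arbitrary choice.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + np.asarray(f) / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m) / 2595.0) - 1.0)

def mel_filterbank(sr: int, n_fft: int, n_mels: int) -> np.ndarray:
    """Triangular filters with peak height 1, shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    # n_mels + 2 edge frequencies, evenly spaced in mel between 0 Hz and Nyquist
    edges = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2))
    fb = np.zeros((n_mels, len(fft_freqs)))
    for i in range(n_mels):
        lo, center, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (fft_freqs - lo) / (center - lo)
        falling = (hi - fft_freqs) / (hi - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))  # triangle, zero outside [lo, hi]
    return fb

def mel_spectrogram(power_spec: np.ndarray, sr: int, n_fft: int, n_mels: int = 40) -> np.ndarray:
    """Map a (n_fft // 2 + 1, n_frames) power spectrogram to (n_mels, n_frames), in dB."""
    mel = mel_filterbank(sr, n_fft, n_mels) @ power_spec
    return 10.0 * np.log10(mel + 1e-10)

sr, n_fft = 22050, 1024
power = np.random.default_rng(0).random((n_fft // 2 + 1, 10))  # stand-in power spectrogram
print(mel_spectrogram(power, sr, n_fft).shape)  # (40, 10)
```

Because the mel scale is roughly logarithmic above a few hundred Hz, the filters are narrow at low frequencies and wide at high ones, compressing the spectrum in a way that loosely mirrors human pitch perception.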

Exploratory Data Analysis

We set out to understand the distribution of our dataset in more depth. Here are some interesting observations worth calling out.

-

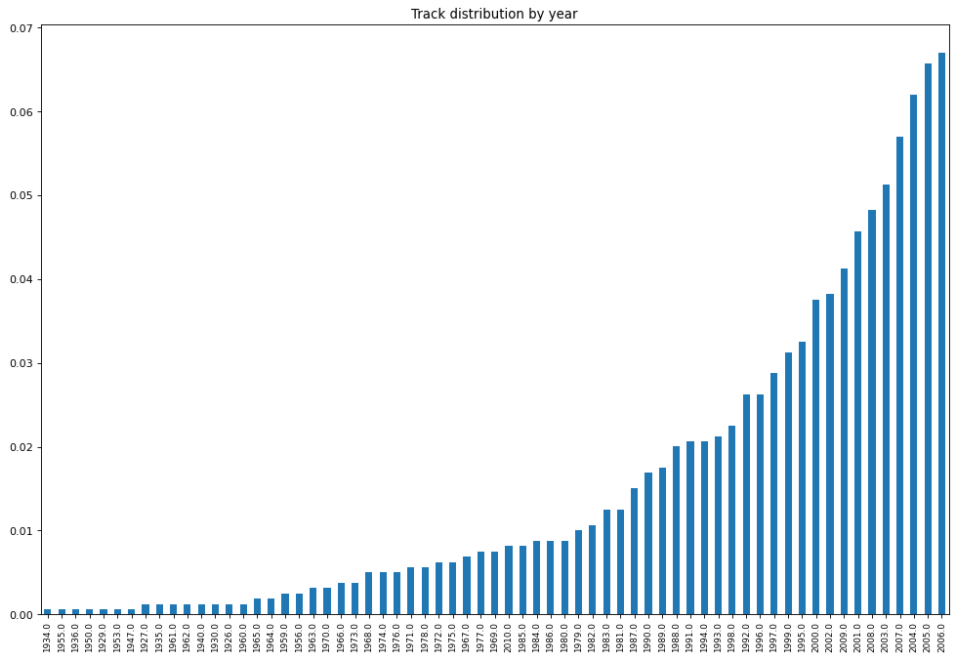

Most songs in our dataset were new (released after the 2000’s).

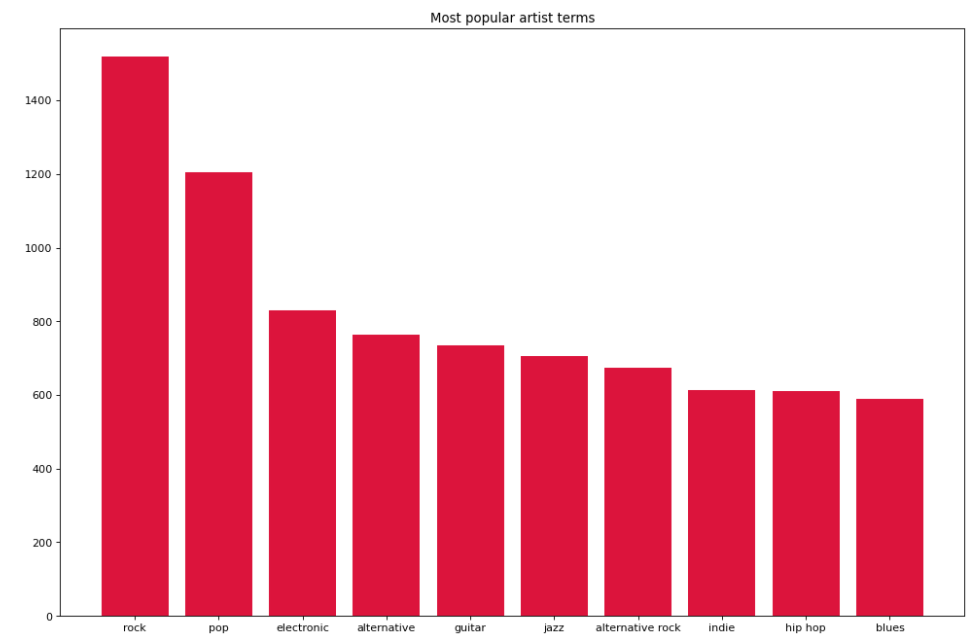

- We saw that ‘rock’ and ‘pop’ were the most frequent artist terms (tags from The Echo Nest) used to describe the songs in our dataset.

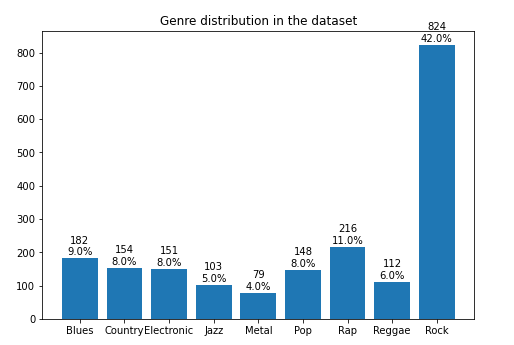

- We analysed the distribution of genres in our dataset and found that rock dominates, accounting for 42% of songs. The remaining genres are spread much more evenly, each between 4% and 11%, with metal the lowest (4%) and pop the highest (11%).

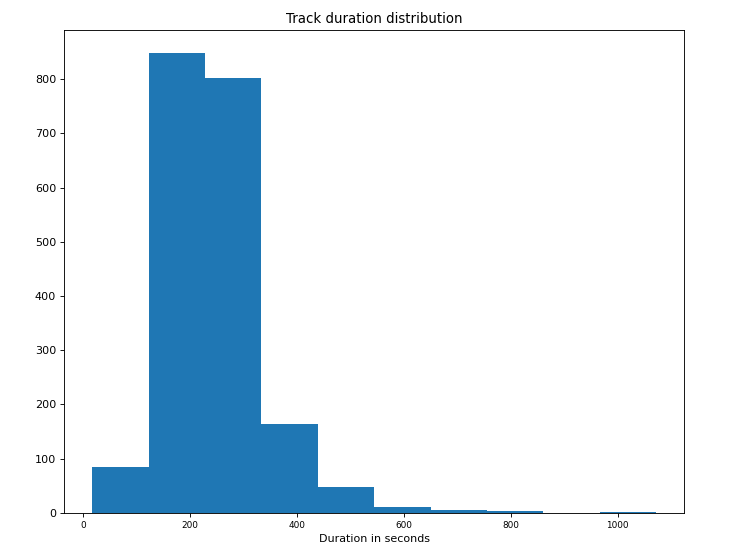

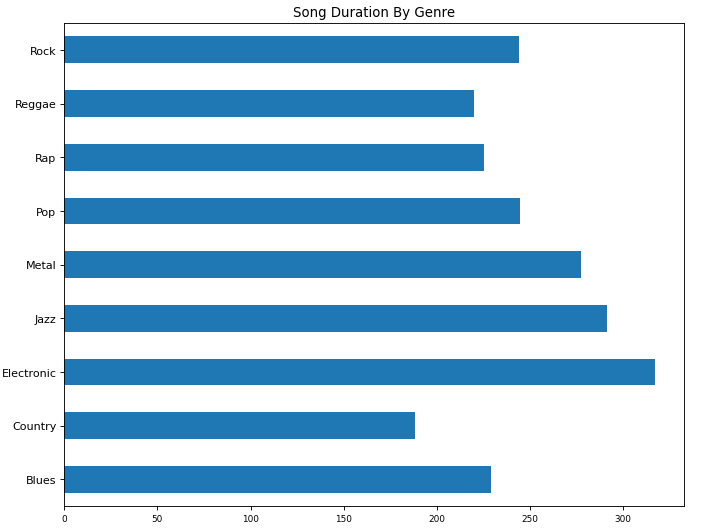

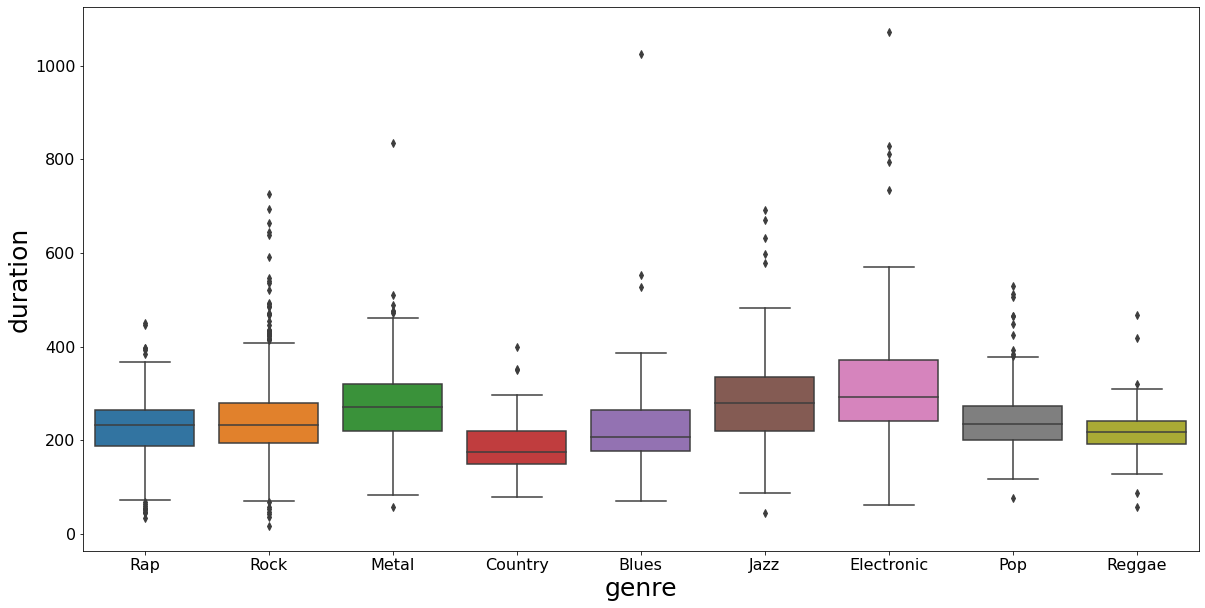

- We analysed the song durations and found that most songs were between 150 and 350 seconds long.

- Grouping mean song duration by genre, we found that electronic songs tended to be the longest and country songs the shortest.

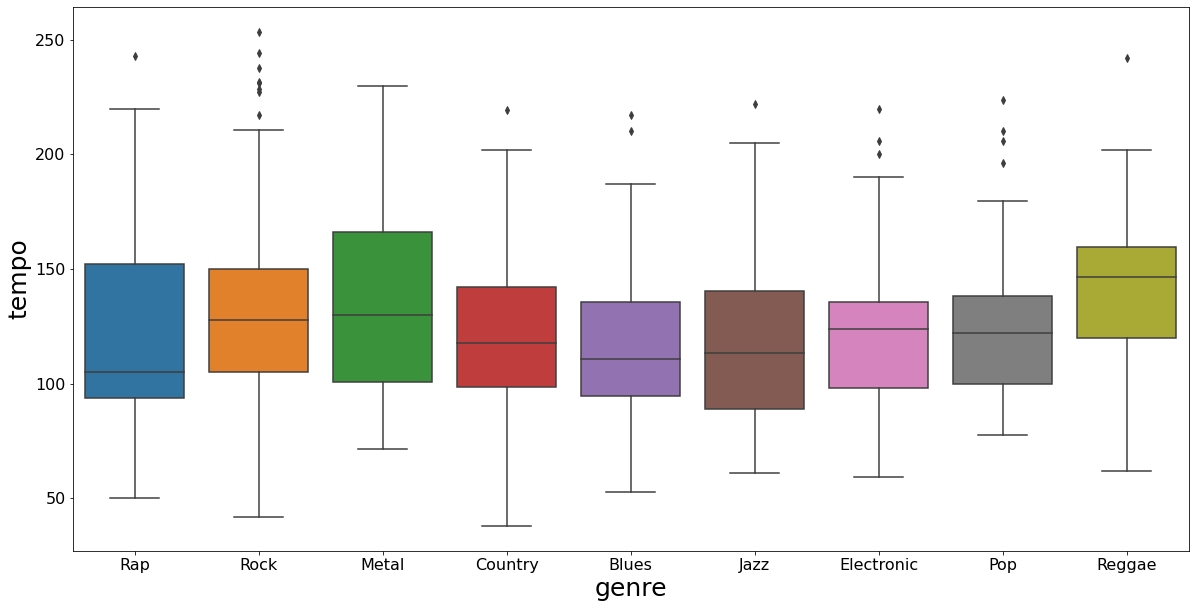

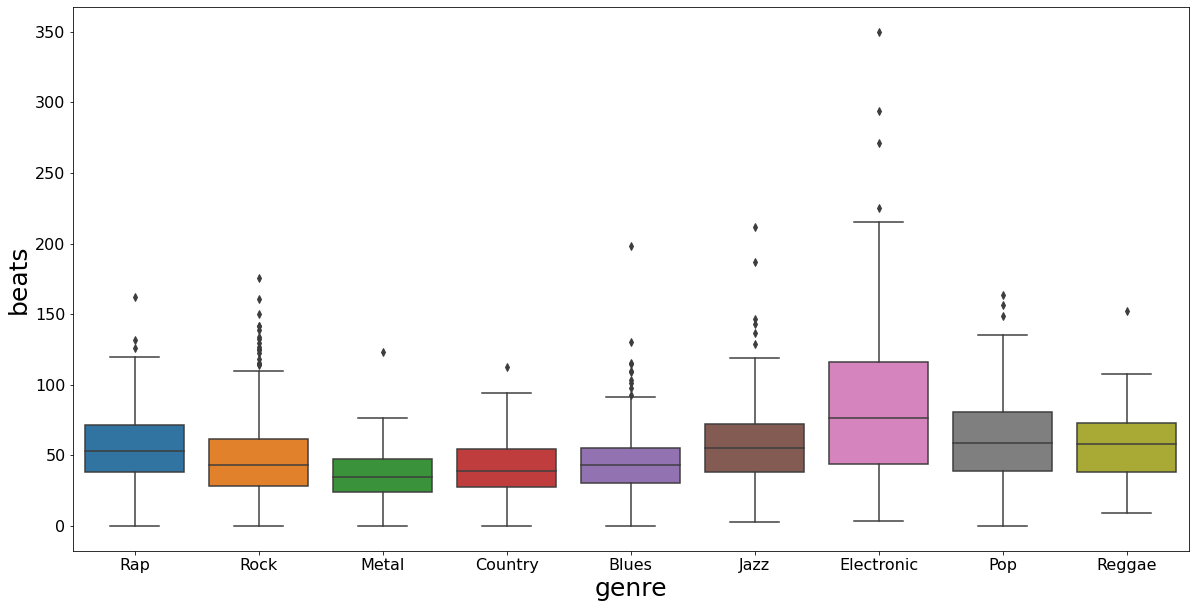

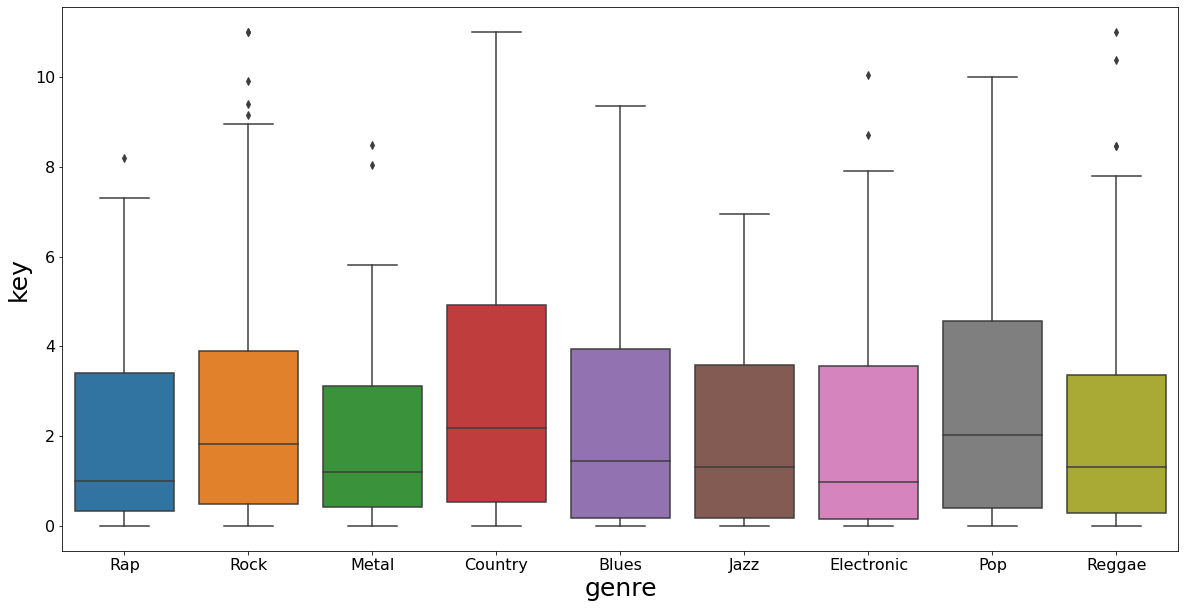

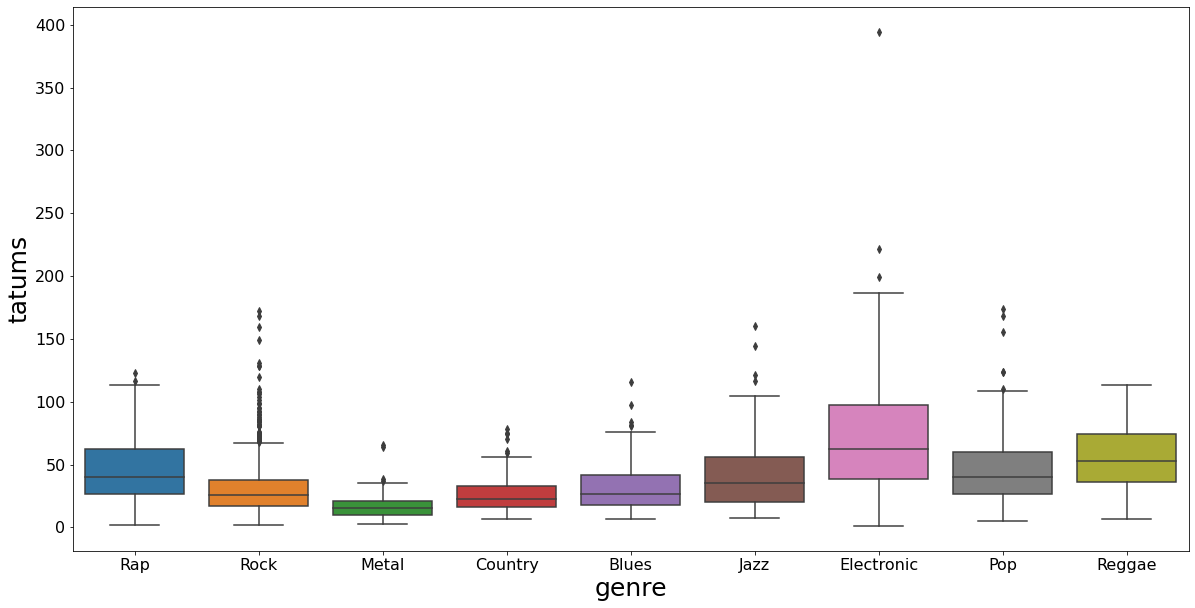

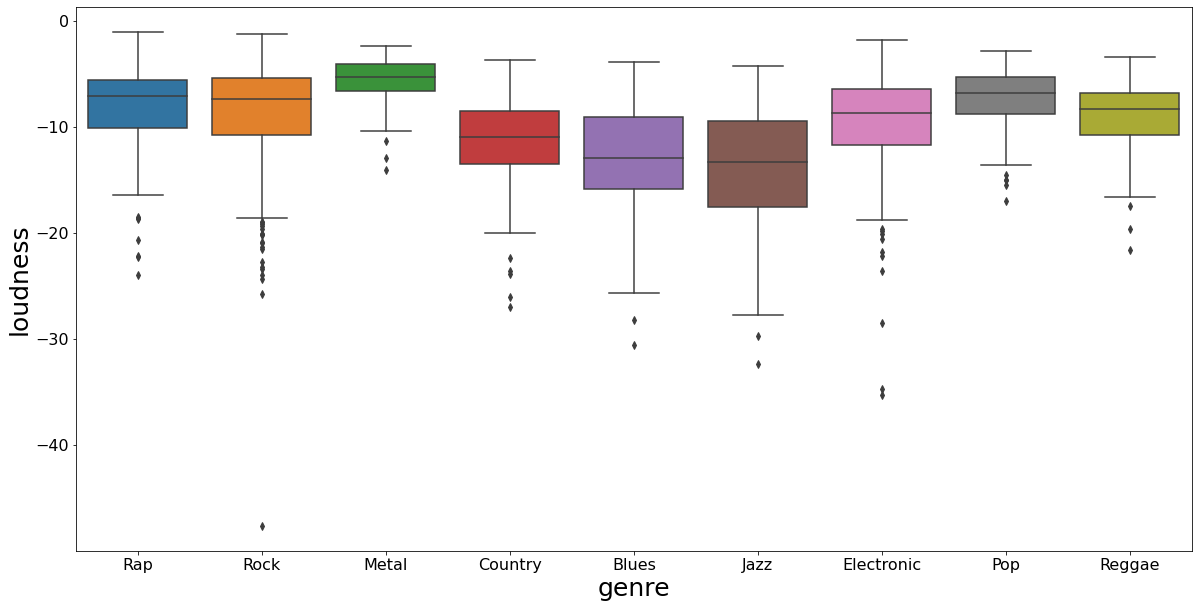

- We also compared other features across genres. Reggae had the highest mean tempo and rap the lowest. Pop and country had a higher average key than other genres (the Million Song Dataset encodes key as an integer pitch class from 0 = C to 11 = B, so this average is only a rough indicator). The tatum (defined as the smallest time interval between successive notes in a rhythmic phrase) was highest for electronic music. Metal had the highest average loudness, and, as noted above, electronic and jazz songs tended to be longer.
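The per-genre summaries above (genre shares, mean duration, tempo, loudness) each amount to a group-by followed by a mean. A minimal pure-Python sketch, assuming each song is a dict with hypothetical `genre`, `duration`, and `tempo` fields; the values are toy numbers, not our data.

```python
from collections import Counter, defaultdict
from statistics import mean
from typing import Dict, List

def genre_shares(songs: List[dict]) -> Dict[str, float]:
    """Fraction of songs belonging to each genre."""
    counts = Counter(s["genre"] for s in songs)
    total = sum(counts.values())
    return {g: c / total for g, c in counts.items()}

def mean_by_genre(songs: List[dict], feature: str) -> Dict[str, float]:
    """Mean of one numeric feature within each genre."""
    groups = defaultdict(list)
    for s in songs:
        groups[s["genre"]].append(s[feature])
    return {g: mean(vals) for g, vals in groups.items()}

songs = [  # toy values, not from our dataset
    {"genre": "Rock", "duration": 240.0, "tempo": 128.0},
    {"genre": "Rock", "duration": 210.0, "tempo": 120.0},
    {"genre": "Electronic", "duration": 330.0, "tempo": 126.0},
    {"genre": "Country", "duration": 180.0, "tempo": 110.0},
]
print(genre_shares(songs)["Rock"])  # 0.5
longest = max(mean_by_genre(songs, "duration").items(), key=lambda kv: kv[1])
print(longest)                      # ('Electronic', 330.0)
```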

Methods

So far, we have focused on the genre classification problem, applying both the metadata-based and the spectral-imaging-based approaches.

For the metadata-based approach, we have so far used a random forest classifier; for the spectral-imaging-based approach, we have used support vector machines (SVMs), an XGBoost (eXtreme Gradient Boosting, XGB) classifier, and a neural network classifier.

Results

Metadata-Based

We split the dataset into training and test sets and trained a random forest classifier to predict the genre labels.

We obtained the following results:

Train Score: 0.49

Test Score: 0.52

Thus, the model's test accuracy is 52%.
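To put this number in context: since 42% of the songs are rock (see the genre distribution above), a trivial classifier that always predicts rock would already score roughly 42%, assuming the test split has a similar genre mix. The sketch below computes accuracy and this majority-class baseline; the labels are made up for illustration and the helper names are hypothetical.

```python
from collections import Counter
from typing import Sequence

def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of positions where the prediction equals the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def majority_baseline(y_train: Sequence[str], y_test: Sequence[str]) -> float:
    """Test accuracy of always predicting the most common training label."""
    majority = Counter(y_train).most_common(1)[0][0]
    return accuracy(y_test, [majority] * len(y_test))

# Toy labels in which rock is the majority class
y_train = ["Rock"] * 4 + ["Pop", "Jazz", "Metal", "Rap", "Country", "Rock"]
y_test = ["Rock", "Rock", "Pop", "Jazz", "Rap"]
y_pred = ["Rock", "Pop", "Pop", "Jazz", "Rock"]
print(accuracy(y_test, y_pred))            # 0.6
print(majority_baseline(y_train, y_test))  # 0.4
```

Reporting a model's accuracy next to this baseline makes clear how much of the score comes from learning versus class imbalance.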

We also plan to try more models, such as a decision tree classifier, a gradient boosting classifier, and a support vector machine, and compare their performance.

Spectral Imaging-Based

We used a 90-10 train-test split and obtained the following results:

- SVM: 71% test score

- XGB classifier: 65% test score

- NN classifier: 20% test score
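With only 10% of the data held out, it can help to stratify the split so that each genre keeps roughly its overall proportion in the test set. The sketch below shows one plain-Python way to do this; stratification, the seed, and the helper name `stratified_split` are illustrative assumptions rather than a description of our exact split.

```python
import random
from collections import defaultdict
from typing import List, Sequence, Tuple

def stratified_split(labels: Sequence[str], test_frac: float = 0.1,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (train_idx, test_idx), holding out about test_frac of each label's examples."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)
    train_idx, test_idx = [], []
    for idxs in by_label.values():
        rng.shuffle(idxs)                      # random order within each genre
        n_test = round(len(idxs) * test_frac)
        test_idx.extend(idxs[:n_test])
        train_idx.extend(idxs[n_test:])
    return sorted(train_idx), sorted(test_idx)

# Toy example: balanced GTZAN-style labels, 100 tracks for each of 10 genres
labels = [f"genre_{g}" for g in range(10) for _ in range(100)]
train_idx, test_idx = stratified_split(labels, test_frac=0.1)
print(len(train_idx), len(test_idx))  # 900 100
```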

So far, as we initially hypothesized, the spectral-imaging-based approach seems to perform better than the metadata-based approach, likely because it captures features of the song's audio composition, which is closer to how humans classify genre. This comparison is still preliminary, however, since the two approaches were evaluated on different datasets.

Reflection and Next Steps

As expected, we spent a significant amount of time on data engineering. With that now in place, we plan to complete the evaluation with all the remaining methods we proposed.

We will first finish the genre classification task in the coming week and then move on to the mood prediction problem.

References

[1] Tzanetakis, G., & Cook, P. (2002). Musical genre classification of audio signals. IEEE Transactions on Speech and Audio Processing, 10(5), 293-302.

[2] Scaringella, N., Zoia, G., & Mlynek, D. (2006). Automatic genre classification of music content: a survey. IEEE Signal Processing Magazine, 23(2), 133-141.

[3] Delbouys, R., Hennequin, R., Piccoli, F., Royo-Letelier, J., & Moussallam, M. (2018). Music mood detection based on audio and lyrics with deep neural net. arXiv preprint arXiv:1809.07276.

[4] Kaggle Million Song Dataset: https://www.kaggle.com/c/msdchallenge

[5] Music Genre Classification | GTZAN Dataset: https://www.kaggle.com/andradaolteanu/gtzan-dataset-music-genre-classification